
World Models

Can agents learn inside of their own dreams?

Abstract

We explore building generative neural network models of popular reinforcement learning environments. Our world model can be trained quickly in an unsupervised manner to learn a compressed spatial and temporal representation of the environment. By using features extracted from the world model as inputs to an agent, we can train a very compact and simple policy that can solve the required task. We can even train our agent entirely inside of its own dream environment generated by its world model, and transfer this policy back into the actual environment.

Introduction

A World Model, from Scott McCloud's Understanding Comics.


Humans develop a mental model of the world based on what they are able to perceive with their limited senses. The decisions and actions we make are based on this internal model. Jay Wright Forrester, the father of system dynamics, described a mental model as:

“The image of the world around us, which we carry in our head, is just a model. Nobody in his head imagines all the world, government or country. He has only selected concepts, and relationships between them, and uses those to represent the real system.”

To handle the vast amount of information that flows through our daily lives, our brain learns an abstract representation of both spatial and temporal aspects of this information. We are able to observe a scene and remember an abstract description thereof. Evidence also suggests that what we perceive at any given moment is governed by our brain's prediction of the future based on our internal model.

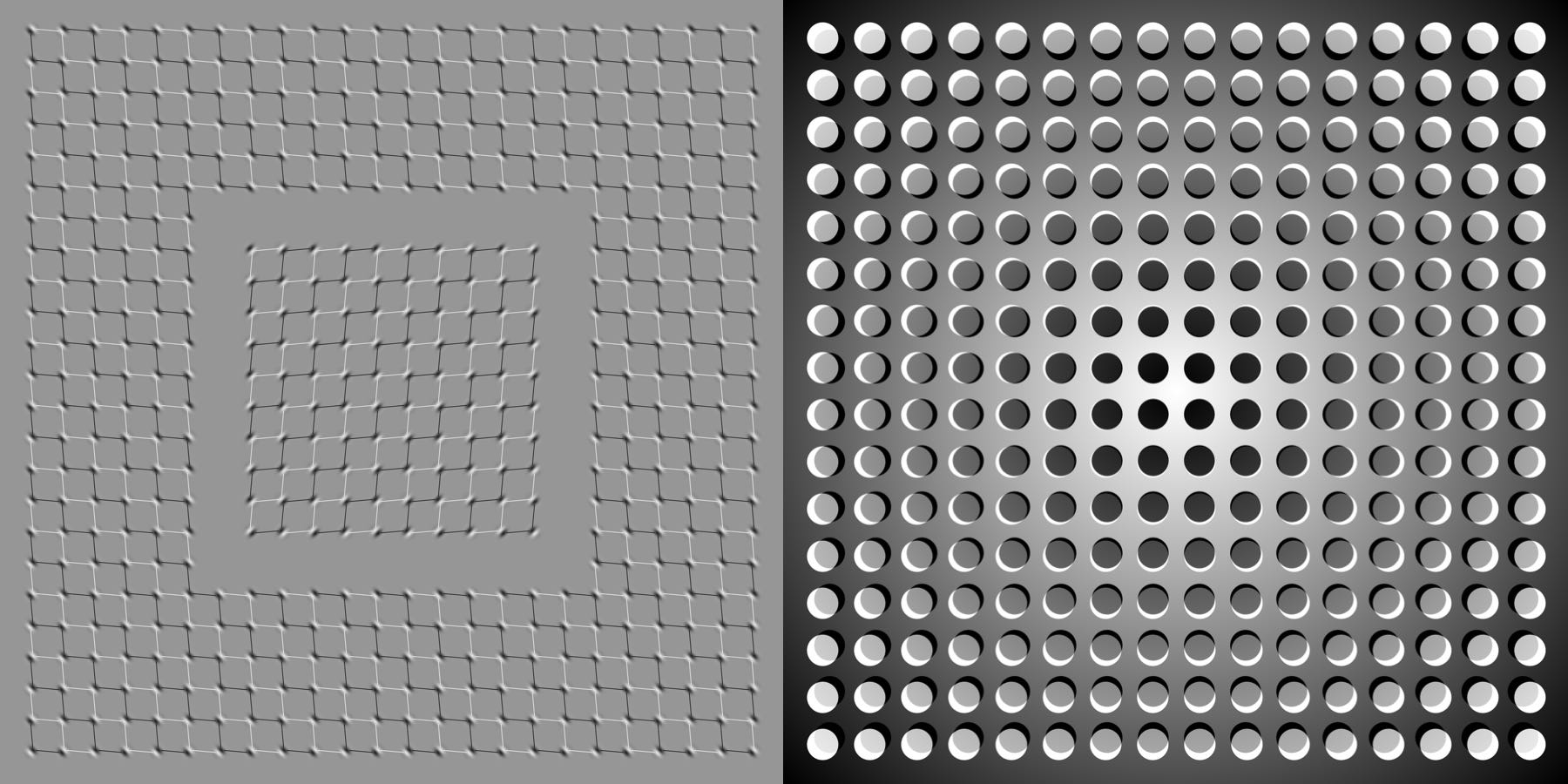

What we see is based on our brain's prediction of the future.


One way of understanding the predictive model inside of our brains is that it might not be about just predicting the future in general, but predicting future sensory data given our current motor actions. We are able to instinctively act on this predictive model and perform fast reflexive behaviours when we face danger, without the need to consciously plan out a course of action.

Take baseball for example. A baseball batter has milliseconds to decide how they should swing the bat, which is shorter than the time it takes for visual signals from our eyes to reach our brain. The reason we are able to hit a 100mph fastball is our ability to instinctively predict when and where the ball will go. For professional players, this all happens subconsciously. Their muscles reflexively swing the bat at the right time and location in line with their internal models' predictions. They can quickly act on their predictions of the future without the need to consciously roll out possible future scenarios to form a plan.

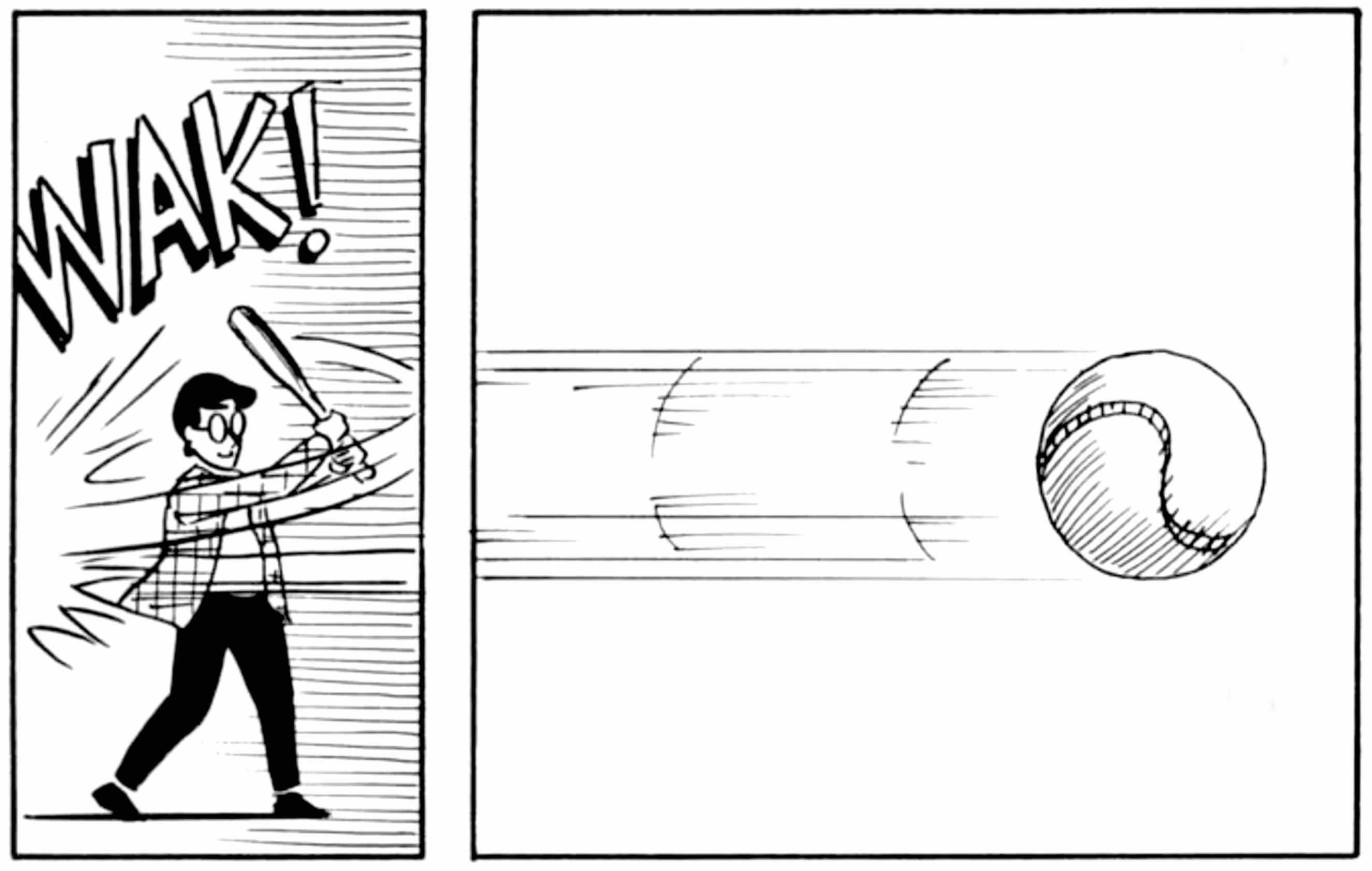

We learn to perceive time spatially when we read comics. According to cartoonist and comics theorist Scott McCloud, “in the world of comics, time and space are one and the same.” Art © Scott McCloud.


In many reinforcement learning (RL) problems, an artificial agent also benefits from having a good representation of past and present states, and a good predictive model of the future, preferably a powerful predictive model implemented on a general purpose computer such as a recurrent neural network (RNN).

Large RNNs are highly expressive models that can learn rich spatial and temporal representations of data. However, many model-free RL methods in the literature use only small neural networks with few parameters. The RL algorithm is often bottlenecked by the credit assignment problem, which makes it hard for traditional RL algorithms to learn the millions of weights of a large model. Hence, in practice, smaller networks are used, as they iterate faster to a good policy during training. (In many RL problems, the feedback, a positive or negative reward, is given at the end of a sequence of steps. The credit assignment problem is the problem of figuring out which steps caused the resulting feedback: which steps should receive credit or blame for the final result?)

Ideally, we would like to be able to efficiently train large RNN-based agents. The backpropagation algorithm can be used to train large neural networks efficiently. In this work we look at training a large neural network to tackle RL tasks, by dividing the agent into a large world model and a small controller model. (Typical model-free RL models have on the order of $10^3$ to $10^6$ model parameters. We look at training models on the order of $10^7$ parameters, which is still rather small compared to state-of-the-art deep learning models with $10^8$ to even $10^9$ parameters. In principle, the procedure described in this article can take advantage of these larger networks if we wanted to use them.) We first train a large neural network to learn a model of the agent's world in an unsupervised manner, and then train the smaller controller model to learn to perform a task using this world model. A small controller lets the training algorithm focus on the credit assignment problem on a small search space, while not sacrificing capacity and expressiveness via the larger world model. By training the agent through the lens of its world model, we show that it can learn a highly compact policy to perform its task.

In this article, we combine several key concepts from a series of papers from 1990 to 2015 on RNN-based world models and controllers with more recent tools from probabilistic modelling, and present a simplified approach to test some of those key concepts in modern RL environments. Experiments show that our approach can be used to solve a challenging task, race car navigation from pixels, that previously has not been solved using more traditional methods.

Most existing model-based RL approaches learn a model of the RL environment, but still train on the actual environment. Here, we also explore fully replacing an actual RL environment with a generated one, training our agent's controller only inside of the environment generated by its own internal world model, and transfer this policy back into the actual environment.

To overcome the problem of an agent exploiting imperfections of the generated environments, we adjust a temperature parameter of the internal world model to control the amount of uncertainty of the generated environments. We train an agent's controller inside of a noisier and more uncertain version of its generated environment, and demonstrate that this approach helps prevent our agent from taking advantage of the imperfections of its internal world model. We will also discuss other related works in the model-based RL literature that share similar ideas of learning a dynamics model and training an agent using this model.

Agent Model

We present a simple model inspired by our own cognitive system. In this model, our agent has a visual sensory component that compresses what it sees into a small representative code. It also has a memory component that makes predictions about future codes based on historical information. Finally, our agent has a decision-making component that decides what actions to take based only on the representations created by its vision and memory components.

Our agent consists of three components that work closely together: Vision (V), Memory (M), and Controller (C).

VAE (V) Model

The environment provides our agent with a high dimensional input observation at each time step. This input is usually a 2D image frame that is part of a video sequence. The role of the V model is to learn an abstract, compressed representation of each observed input frame.

Flow diagram of a Variational Autoencoder.


We use a Variational Autoencoder (VAE) as the V model in our experiments. In the following demo, we show how the V model compresses each frame it receives at time step $t$ into a low dimensional latent vector $z_t$. This compressed representation can be used to reconstruct the original image.

Interactive Demo

A VAE trained on screenshots obtained from a VizDoom environment. You can load randomly chosen screenshots to be encoded into a small latent vector z, which is used to reconstruct the original screenshot. You can also experiment with adjusting the values of the z vector using the slider bars to see how it affects the reconstruction, or randomize z to observe the space of possible screenshots learned by our VAE.

MDN-RNN (M) Model

While it is the role of the V model to compress what the agent sees at each time frame, we also want to compress what happens over time. For this purpose, the role of the M model is to predict the future. The M model serves as a predictive model of the future z vectors that V is expected to produce. Because many complex environments are stochastic in nature, we train our RNN to output a probability density function p(z) instead of a deterministic prediction of z.

RNN with a Mixture Density Network output layer. The MDN outputs the parameters of a mixture of Gaussian distribution used to sample a prediction of the next latent vector z.


In our approach, we approximate $p(z)$ as a mixture of Gaussians, and train the RNN to output the probability distribution of the next latent vector $z_{t+1}$ given the current and past information made available to it.

More specifically, the RNN will model $P(z_{t+1} \mid a_t, z_t, h_t)$, where $a_t$ is the action taken at time $t$ and $h_t$ is the hidden state of the RNN at time $t$. During sampling, we can adjust a temperature parameter τ to control model uncertainty -- we will find adjusting τ to be useful for training our controller later on.
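Written out, the conditional density can take the following form. This is a sketch under assumptions that the text above does not state: $K$ mixture components, each with a diagonal covariance, and with the mixture weights $\pi_k$, means $\mu_k$ and standard deviations $\sigma_k$ all produced by the RNN from $(a_t, z_t, h_t)$.

```latex
P(z_{t+1} \mid a_t, z_t, h_t)
  = \sum_{k=1}^{K} \pi_k \,
    \mathcal{N}\!\left(z_{t+1};\ \mu_k,\ \operatorname{diag}(\sigma_k^2)\right),
\qquad \pi_k \ge 0, \quad \sum_{k=1}^{K} \pi_k = 1
```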

SketchRNN is an example of a MDN-RNN used to predict the next pen strokes of a sketch drawing. We use a similar model to predict the next latent vector z.

This approach is known as a Mixture Density Network combined with an RNN (MDN-RNN), and has been used successfully in the past for sequence generation problems such as generating handwriting and sketches.
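To make the role of the temperature τ concrete, here is a minimal sketch of sampling one scalar from a Gaussian mixture at a given temperature. It assumes the convention used in SketchRNN-style models: the log mixture weights are divided by τ and each standard deviation is scaled by √τ. The function name `sample_mdn` and its arguments are hypothetical, and the exact scheme used in the experiments may differ.

```python
import math
import random


def sample_mdn(log_pi, mu, sigma, tau=1.0, rng=random):
    """Sample one scalar from a 1-D Gaussian mixture at temperature tau.

    log_pi, mu and sigma are per-component lists (hypothetical MDN outputs).
    """
    # Dividing the log-weights by tau sharpens (tau < 1) or flattens
    # (tau > 1) the distribution over mixture components.
    scaled = [lp / tau for lp in log_pi]
    m = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    total = sum(weights)
    probs = [w / total for w in weights]

    # Pick a component according to the adjusted weights.
    k = rng.choices(range(len(probs)), weights=probs)[0]

    # Assumed convention: scale the chosen component's std by sqrt(tau).
    return rng.gauss(mu[k], sigma[k] * math.sqrt(tau))
```

With τ close to zero the sampler almost always returns a value near the mean of the most likely component, while larger τ spreads samples across components and widens each Gaussian.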

Controller (C) Model

The Controller (C) model is responsible for determining the course of actions to take in order to maximize the expected cumulative reward of the agent during a rollout of the environment. In our experiments, we deliberately make C as simple and small as possible, and train it separately from V and M, so that most of our agent's complexity resides in the world model (V and M).

C is a simple single layer linear model that maps $z_t$ and $h_t$ directly to action $a_t$ at each time step:

$$a_t = W_c\,[z_t\ h_t] + b_c$$

In this linear model, $W_c$ and $b_c$ are the weight matrix and bias vector that map the concatenated input vector $[z_t\ h_t]$ to the output action vector $a_t$. (To be clear, the prediction of $z_{t+1}$ is not fed into the controller C directly, just the hidden state $h_t$ and $z_t$. This is because $h_t$ has all the information needed to generate the parameters of a mixture of Gaussians, if we want to sample $z_{t+1}$ to make a prediction.)
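The linear map above is small enough to write out with plain Python lists. The sketch below is illustrative only: `controller_action` and `controller_param_count` are hypothetical helper names, and the hidden size of 256 used in the usage note is an assumption rather than something stated in this section.

```python
def controller_action(W_c, b_c, z_t, h_t):
    """Compute a_t = W_c [z_t h_t] + b_c using plain Python lists."""
    x = list(z_t) + list(h_t)  # concatenated input vector [z_t h_t]
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(W_c, b_c)]


def controller_param_count(z_dim, h_dim, action_dim):
    """Number of weights plus biases in the linear controller."""
    return (z_dim + h_dim) * action_dim + action_dim
```

For example, `controller_param_count(32, 256, 3)` gives 867: a 32-dimensional latent vector and an assumed 256-dimensional hidden state feed three action outputs, for 288 × 3 weights plus 3 biases.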

Putting Everything Together

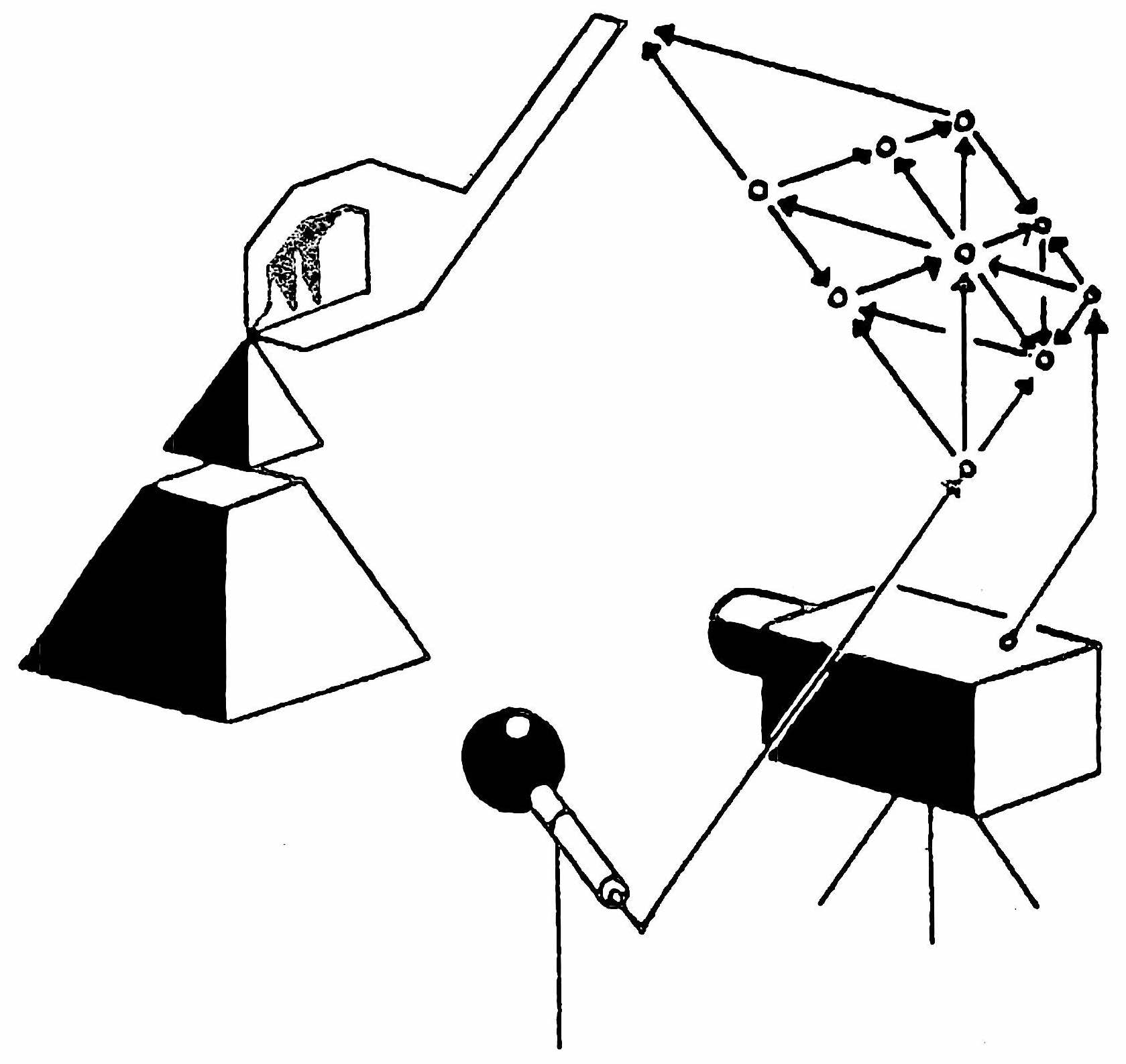

The following flow diagram illustrates how V, M, and C interact with the environment:

Flow diagram of our Agent model. The raw observation is first processed by V at each time step $t$ to produce $z_t$. The input into C is this latent vector $z_t$ concatenated with M's hidden state $h_t$ at each time step. C will then output an action vector $a_t$ for motor control. M will then take the current $z_t$ and action $a_t$ as an input to update its own hidden state to produce $h_{t+1}$ to be used at time $t+1$.


Below is the pseudocode for how our agent model is used in the OpenAI Gym environment. Running this function on a given controller C will return the cumulative reward during a rollout of the environment.

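```python
def rollout(controller):
    """Run one episode and return its cumulative reward.

    env, rnn, and vae are global variables.
    """
    obs = env.reset()
    h = rnn.initial_state()
    done = False
    cumulative_reward = 0
    while not done:
        z = vae.encode(obs)              # V: compress the frame into z_t
        a = controller.action([z, h])    # C: choose a_t from [z_t, h_t]
        # The classic Gym step() also returns an info dict, ignored here.
        obs, reward, done, _ = env.step(a)
        cumulative_reward += reward
        h = rnn.forward([a, z, h])       # M: update the hidden state
    return cumulative_reward
```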

This minimal design for C also offers important practical benefits. Advances in deep learning provided us with the tools to train large, sophisticated models efficiently, provided we can define a well-behaved, differentiable loss function. Our V and M models are designed to be trained efficiently with the backpropagation algorithm using modern GPU accelerators, so we would like most of the model's complexity and parameters to reside in V and M. The number of parameters of C, a linear model, is minimal in comparison. This choice allows us to explore more unconventional ways to train C -- for example, even using evolution strategies (ES) to tackle more challenging RL tasks where the credit assignment problem is difficult.

To optimize the parameters of C, we chose the Covariance-Matrix Adaptation Evolution Strategy (CMA-ES) as our optimization algorithm since it is known to work well for solution spaces of up to a few thousand parameters. We evolve parameters of C on a single machine with multiple CPU cores running multiple rollouts of the environment in parallel.
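As a rough illustration of evolving a small parameter vector, the sketch below implements a basic Gaussian evolution strategy. It is deliberately not CMA-ES: it keeps a fixed step size and does not adapt a covariance matrix. The name `simple_es`, the population size, and the elite fraction are all assumptions made for this example.

```python
import random


def simple_es(fitness, dim, pop_size=16, sigma=0.1, generations=50, seed=0):
    """Maximize fitness(params) with a basic Gaussian evolution strategy.

    A simplified stand-in for CMA-ES: fixed step size, no covariance
    adaptation.
    """
    rng = random.Random(seed)
    mean = [0.0] * dim
    best_params, best_fit = list(mean), fitness(mean)
    for _ in range(generations):
        # Sample candidate parameter vectors around the current mean.
        population = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                      for _ in range(pop_size)]
        scored = sorted(((fitness(p), p) for p in population),
                        key=lambda fp: fp[0], reverse=True)
        # Move the mean to the average of the top quarter of candidates.
        elite = [p for _, p in scored[:max(1, pop_size // 4)]]
        mean = [sum(vals) / len(elite) for vals in zip(*elite)]
        # Remember the best candidate seen so far.
        if scored[0][0] > best_fit:
            best_fit, best_params = scored[0][0], scored[0][1]
    return best_params, best_fit
```

In the setting described here, `fitness` would wrap a rollout of the environment with the candidate parameters loaded into C, and since each candidate is evaluated independently, the evaluations can be spread over multiple CPU cores.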

For more specific information about the models, training procedures, and environments used in our experiments, please refer to the Appendix.

Car Racing Experiment: World Model for Feature Extraction

A predictive world model can help us extract useful representations of space and time. By using these features as inputs of a controller, we can train a compact and minimal controller to perform a continuous control task, such as learning to drive from pixel inputs for a top-down car racing environment. In this section, we describe how we can train the Agent model described earlier to solve a car racing task. To our knowledge, our agent is the first known solution to achieve the score required to solve this task. (We find this task interesting because, although it is not difficult to train an agent to wobble around randomly generated tracks and obtain a mediocre score, CarRacing-v0 defines "solving" as getting an average reward of 900 over 100 consecutive trials, which means the agent can only afford very few driving mistakes.)

Our agent learning to navigate a top-down racing environment.

In this environment, the tracks are randomly generated for each trial, and our agent is rewarded for visiting as many tiles as possible in the least amount of time. The agent controls three continuous actions: steering left/right, acceleration, and brake.

To train our V model, we first collect a dataset of 10,000 random rollouts of the environment. We first have an agent act randomly to explore the environment multiple times, and record the random actions $a_t$ taken and the resulting observations from the environment. (We will discuss an iterative training procedure later on for more complicated environments where a random policy is not sufficient.) We use this dataset to train V to learn a latent space of each frame observed. We train our VAE to encode each frame into a low dimensional latent vector z by minimizing the difference between a given frame and the reconstructed version of the frame produced by the decoder from z. The following demo shows the results of our VAE after training:

Interactive Demo

Our VAE trained on observations from CarRacing-v0. Despite losing details during this lossy compression process, latent vector z captures the essence of each 64x64px image frame.
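The training objective for V can be sketched in a few lines. The text above describes only the reconstruction term; the KL term below is the standard VAE regularizer toward a unit Gaussian prior, and the equal weighting of the two terms is an assumption. All function names are hypothetical, and frames are treated as flat lists of pixel values.

```python
import math
import random


def reparameterize(mu, logvar, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, I)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, logvar))


def reconstruction_error(frame, recon):
    """Sum of squared differences between a frame and its reconstruction."""
    return sum((x - y) ** 2 for x, y in zip(frame, recon))


def vae_loss(frame, recon, mu, logvar):
    """Reconstruction term plus KL regularizer (equal weighting assumed)."""
    return reconstruction_error(frame, recon) + kl_to_standard_normal(mu, logvar)
```

Here `mu` and `logvar` stand for the encoder outputs for one frame, and `recon` for the decoder output computed from a sample drawn with `reparameterize`.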

We can now use our trained V model to pre-process each frame at time $t$ into $z_t$ to train our M model. Using this pre-processed data, along with the recorded random actions $a_t$ taken, our MDN-RNN can now be trained to model $P(z_{t+1} \mid a_t, z_t, h_t)$ as a mixture of Gaussians. (Although in principle we can train V and M together in an end-to-end manner, we found that training each separately is more practical, achieves satisfactory results, and does not require exhaustive hyperparameter tuning. As images are not required to train M on its own, we can even train on large batches of long sequences of latent vectors encoding the entire 1000 frames of an episode to capture longer term dependencies, on a single GPU.)

In this experiment, the world model (V and M) has no knowledge about the actual reward signals from the environment. Its task is simply to compress and predict the sequence of image frames observed. Only the Controller (C) Model has access to the reward information from the environment. Since there are a mere 867 parameters inside the linear controller model, evolutionary algorithms such as CMA-ES are well suited for this optimization task.

The figure below compares the actual observation given to the agent and the observation captured by the world model. We can use the VAE to reconstruct each frame using $z_t$ at each time step to visualize the quality of the information the agent actually sees during a rollout:

| Actual observations from the environment. | What gets encoded into $z_t$. |

Procedure

To summarize the Car Racing experiment, below are the steps taken:

- Collect 10,000 rollouts from a random policy.

- Train VAE (V) to encode each frame into a latent vector $z \in \mathbb{R}^{32}$.

- Train MDN-RNN (M) to model $P(z_{t+1} \mid a_t, z_t, h_t)$.

- Evolve Controller (C) to maximize the expected cumulative reward of a rollout.

| Model | Parameter Count |
|---|---|
| VAE | 4,348,547 |
| MDN-RNN | 422,368 |
| Controller | 867 |

Car Racing Experiment Results

V Model Only

Training an agent to drive is not a difficult task if we have a good representation of the observation. Previous works have shown that with a good set of hand-engineered information about the observation, such as LIDAR information, angles, positions and velocities, one can easily train a small feed-forward network to take this hand-engineered input and output a satisfactory navigation policy. For this reason, we first want to test our agent by handicapping C to only have access to V but not M, so we define our controller as $a_t = W_c\, z_t + b_c$.

Limiting our controller to see only $z_t$, but not $h_t$, results in wobbly and unstable driving behaviours.

Although the agent is still able to navigate the race track in this setting, we notice it wobbles around and misses the tracks on sharper corners. This handicapped agent achieved an average score of 632 ± 251 over 100 random trials, in line with the performance of other agents on OpenAI Gym's leaderboard and traditional Deep RL methods such as A3C. Adding a hidden layer to C's policy network helps to improve the results to 788 ± 141, but not quite enough to solve this environment.

Full World Model (V and M)

The representation $z_t$ provided by our V model only captures a representation at a moment in time and doesn't have much predictive power. In contrast, M is trained to do one thing, and to do it really well, which is to predict $z_{t+1}$. Since M's prediction of $z_{t+1}$ is produced from the RNN's hidden state $h_t$ at time $t$, this vector is a good candidate for the set of learned features we can give to our agent. Combining $z_t$ with $h_t$ gives our controller C a good representation of both the current observation and what to expect in the future.

Driving is more stable if we give our controller access to both $z_t$ and $h_t$.

Indeed, we see that allowing the agent to access both $z_t$ and $h_t$ greatly improves its driving capability. The driving is more stable, and the agent seems able to attack the sharp corners effectively. Furthermore, we see that in making these fast reflexive driving decisions during a car race, the agent does not need to plan ahead and roll out hypothetical scenarios of the future. Since $h_t$ contains information about the probability distribution of the future, the agent can just query the RNN instinctively to guide its action decisions. Like a seasoned Formula One driver or the baseball player discussed earlier, the agent can instinctively predict when and where to navigate in the heat of the moment.

| Method | Average Score over 100 Random Tracks |
|---|---|
| DQN | |
| A3C (continuous) | |
| A3C (discrete) | |
| ceobillionaire's algorithm (unpublished) | 838 ± 11 |
| V model only, z input | 632 ± 251 |
| V model only, z input with a hidden layer | 788 ± 141 |
| Full World Model, z and h | 906 ± 21 |

Our agent was able to achieve a score of 906 ± 21 over 100 random trials, effectively solving the task and obtaining new state of the art results. Previous attempts using traditional Deep RL methods obtained average scores in the range of 591 to 652, and the best reported solution on the leaderboard obtained an average score of 838 ± 11 over 100 random consecutive trials. Traditional Deep RL methods often require pre-processing of each frame, such as employing edge-detection, in addition to stacking a few recent frames into the input. In contrast, our world model takes in a stream of raw RGB pixel images and directly learns a spatial-temporal representation. To our knowledge, our method is the first reported solution to solve this task.

Car Racing Dreams

Since our world model is able to model the future, we are also able to have it come up with hypothetical car racing scenarios on its own. We can ask it to produce the probability distribution of $z_{t+1}$ given the current states, sample a $z_{t+1}$ and use this sample as the real observation. We can put our trained C back into this dream environment generated by M. The following demo shows how our world model can be used to generate the car racing environment:

Interactive Demo

Our agent driving inside of its own dream world. Here, we deploy our trained policy into a fake environment generated by the MDN-RNN, and rendered using the VAE's decoder. You can override the agent's actions by tapping on the left or right side of the screen, or by hitting arrow keys (left/right to steer, up/down to accelerate or brake). The uncertainty level of the environment can be adjusted by changing τ using the slider on the bottom right.

We have just seen that a policy learned inside of the real environment appears to somewhat function inside of the dream environment. This raises the question: can we train our agent to learn inside of its own dream, and transfer this policy back to the actual environment?

VizDoom Experiment: Learning Inside of a Dream

If our world model is sufficiently accurate for its purpose, and complete enough for the problem at hand, we should be able to substitute the actual environment with this world model. After all, our agent does not directly observe the reality, but only sees what the world model lets it see. In this experiment, we train an agent inside the dream environment generated by its world model trained to mimic a VizDoom environment.

Our final agent solving the VizDoom: Take Cover environment.

The agent must learn to avoid fireballs shot by monsters from the other side of the room with the sole intent of killing the agent. There are no explicit rewards in this environment, so to mimic natural selection, the cumulative reward can be defined to be the number of time steps the agent manages to stay alive during a rollout. Each rollout in the environment runs for a maximum of 2100 time steps (∼ 60 seconds), and the task is considered solved if the average survival time over 100 consecutive rollouts is greater than 750 time steps (∼ 20 seconds).

Procedure

The setup of our VizDoom experiment is largely the same as the Car Racing task, except for a few key differences. In the Car Racing task, M is only trained to model the next $z_t$. Since we want to build a world model we can train our agent in, our M model here will also predict whether the agent dies in the next frame (as a binary event $\mathit{done}_t$, or $d_t$ for short), in addition to the next frame $z_t$.

Since the M model can predict the done state in addition to the next observation, we now have all of the ingredients needed to make a full RL environment. We first build an OpenAI Gym environment interface by wrapping a gym.Env interface over our M as if it were a real Gym environment, and then train our agent inside of this virtual environment instead of using the actual environment.

In this simulation, we don't need the V model to encode any real pixel frames during the hallucination process, so our agent trains entirely in a latent space environment. This has many advantages that will be discussed later on.

This virtual environment has an identical interface to the real environment, so after the agent learns a satisfactory policy in the virtual environment, we can easily deploy this policy back into the actual environment to see how well the policy transfers over.
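The idea of wrapping M behind an environment interface can be sketched as follows. To stay self-contained, this example does not subclass `gym.Env`; it only mirrors the classic `reset()` and `step()` signatures. The `model` object, its `initial()` and `predict()` methods, and the `ToyModel` stand-in are all hypothetical. A real setup would also need to expose M's hidden state to the controller.

```python
class DreamEnv:
    """Gym-style environment whose dynamics come from a learned model M.

    `model` is a hypothetical object exposing:
      initial()          -> (z0, h0)
      predict(a, z, h)   -> (next_z, died, next_h)
    """

    def __init__(self, model, max_steps=2100):
        self.model = model
        self.max_steps = max_steps

    def reset(self):
        self.z, self.h = self.model.initial()
        self.t = 0
        return self.z

    def step(self, action):
        # Ask M for a sampled next latent, a death flag, and its new state.
        self.z, died, self.h = self.model.predict(action, self.z, self.h)
        self.t += 1
        done = bool(died) or self.t >= self.max_steps
        reward = 1.0  # one unit of reward per time step survived
        return self.z, reward, done, {}


class ToyModel:
    """Stand-in for M, used only to exercise the interface."""

    def initial(self):
        return [0.0], 0

    def predict(self, a, z, h):
        # The toy agent "dies" on the fifth step regardless of the action.
        return [z[0] + a], h + 1 >= 5, h + 1
```

Because the wrapper returns the same kind of tuple as a real environment, the same rollout loop can be pointed at either one.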

To summarize the Take Cover experiment, below are the steps taken:

1. Collect 10,000 rollouts from a random policy.

2. Train VAE (V) to encode each frame into a latent vector $z \in \mathbb{R}^{64}$, and use V to convert the images collected from (1) into the latent space representation.

3. Train MDN-RNN (M) to model $P(z_{t+1}, d_{t+1} \mid a_t, z_t, h_t)$.

4. Evolve Controller (C) to maximize the expected survival time inside the virtual environment.

5. Use the learned policy from (4) on the actual Gym environment.

| Model | Parameter Count |
|---|---|
| VAE | 4,446,915 |
| MDN-RNN | 1,678,785 |
| Controller | 1,088 |

Training Inside of the Dream

After some training, our controller learns to navigate around the dream environment and escape from deadly fireballs launched by monsters generated by the M model. Our agent achieved a score in this virtual environment of ∼ 900 time steps.

The following demo shows how our agent navigates inside its own dream. The M model learns to generate monsters that shoot fireballs in the direction of the agent, while the C model discovers a policy to avoid these generated fireballs. Here, the V model is only used to decode the latent vectors $z_t$ produced by M into a sequence of pixel images we can see:

Interactive Demo

Our agent discovers a policy to avoid generated fireballs. In this demo, you can override the agent's action by using the left/right keys on your keyboard, or by tapping on either side of the screen. You can also control the uncertainty level of the environment by adjusting the temperature parameter using the slider on the bottom right.

Here, our RNN-based world model is trained to mimic a complete game environment designed by human programmers. By learning only from raw image data collected from random episodes, it learns how to simulate the essential aspects of the game -- such as the game logic, enemy behaviour, physics, and also the 3D graphics rendering.

For instance, if the agent selects the left action, the M model learns to move the agent to the left and adjust its internal representation of the game states accordingly. It also learns to block the agent from moving beyond the walls on both sides of the level if the agent attempts to move too far in either direction. Occasionally, the M model needs to keep track of multiple fireballs being shot from several different monsters and coherently move them along in their intended directions. It must also detect whether the agent has been killed by one of these fireballs.

Unlike the actual game environment, however, we note that it is possible to add extra uncertainty into the virtual environment, thus making the game more challenging in the dream environment. We can do this by increasing the temperature parameter τ during the sampling process of $z_{t+1}$. By increasing the uncertainty, our dream environment becomes more difficult compared to the actual environment. The fireballs may move more randomly in a less predictable path compared to the actual game. Sometimes the agent may even die due to sheer misfortune, without explanation.

We find agents that perform well in higher temperature settings generally perform better in the normal setting. In fact, increasing τ helps prevent our controller from taking advantage of the imperfections of our world model -- we will discuss this in more depth later on.

Transfer Policy to Actual Environment

Deploying our policy learned inside of the dream RNN environment back into the actual VizDoom environment.

We took the agent trained inside of the virtual environment and tested its performance on the original VizDoom scenario. The score over 100 random consecutive trials is ∼ 1100 time steps, far beyond the required score of 750 time steps, and also much higher than the score obtained inside the more difficult virtual environment. (We will discuss how this score compares to other models later on.)

| Cropped 64x64px frame of environment. | Reconstruction from latent vector. |

We see that even though the V model is not able to capture all of the details of each frame correctly, for instance, getting the number of monsters correct, the agent is still able to use the learned policy to navigate in the real environment. As the virtual environment cannot even keep track of the exact number of monsters in the first place, an agent that is able to survive the noisier and uncertain virtual nightmare environment will thrive in the original, cleaner environment.

Cheating the World Model

In our childhood, we may have encountered ways to exploit video games that were not intended by the original game designer. Players discover ways to collect unlimited lives or health, and by taking advantage of these exploits, they can easily complete an otherwise difficult game. However, in the process of doing so, they may have forfeited the opportunity to learn the skill required to master the game as intended by the game designer. In our initial experiments, we noticed that our agent discovered an adversarial policy to move around in such a way that the monsters in this virtual environment governed by M never shoot a single fireball during some rollouts. Even when there are signs of a fireball forming, the agent moves in a way that extinguishes the fireballs.

Agent discovers an adversarial policy that fools the monsters inside the world model into never launching any fireballs during some rollouts.

Because M is only an approximate probabilistic model of the environment, it will occasionally generate trajectories that do not follow the laws governing the actual environment. As we previously pointed out, even the number of monsters on the other side of the room in the actual environment is not exactly reproduced by M. For this reason, our world model will be exploitable by C, even if such exploits do not exist in the actual environment.

As a result of using M to generate a virtual environment for our agent, we are also giving the controller access to all of the hidden states of M. This is essentially granting our agent access to all of the internal states and memory of the game engine, rather than only the game observations that the player gets to see. Therefore our agent can efficiently explore ways to directly manipulate the hidden states of the game engine in its quest to maximize its expected cumulative reward. The weakness of this approach of learning a policy inside of a learned dynamics model is that our agent can easily find an adversarial policy that can fool our dynamics model -- it will find a policy that looks good under our dynamics model, but will fail in the actual environment, usually because it visits states where the model is wrong because they are away from the training distribution.

This weakness could be the reason that many previous works that learn dynamics models of RL environments do not actually use those models to fully replace the actual environments. Like in the M model proposed in , the dynamics model is deterministic, making it easily exploitable by the agent if it is not perfect. Using Bayesian models, as in PILCO, helps to address this issue to some extent through uncertainty estimates, but they do not fully solve the problem. Recent work combines the model-based approach with traditional model-free RL training by first initializing the policy network with the learned policy, but must subsequently rely on model-free methods to fine-tune this policy in the actual environment.

To make it more difficult for our C to exploit deficiencies of M, we chose to use the MDN-RNN as the dynamics model of the distribution of possible outcomes in the actual environment, rather than merely predicting a deterministic future. Even if the actual environment is deterministic, the MDN-RNN would in effect approximate it as a stochastic environment. This has the advantage of allowing us to train C inside a more stochastic version of any environment -- we can simply adjust the temperature parameter τ to control the amount of randomness in M, hence controlling the tradeoff between realism and exploitability.
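As a rough illustration of how a temperature parameter can control the randomness of a mixture density output, the sketch below samples one scalar from a Gaussian mixture. The specific scaling used here (dividing the mixture logits by τ and multiplying each standard deviation by √τ) is an assumption borrowed from common practice in sketch and handwriting generation models, and the function names are hypothetical; the text above only states that τ adjusts the amount of randomness in M.

```python
import math
import random


def adjust_temperature(logits, sigmas, tau):
    """Return (mixture probabilities, scaled std devs) for temperature tau.

    Assumed scaling: logits / tau and sigma * sqrt(tau).
    """
    scaled = [l / tau for l in logits]
    m = max(scaled)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    scaled_sigmas = [s * math.sqrt(tau) for s in sigmas]
    return probs, scaled_sigmas


def sample_mixture(logits, mus, sigmas, tau, rng):
    """Draw one scalar sample from the temperature-adjusted mixture."""
    probs, scaled_sigmas = adjust_temperature(logits, sigmas, tau)
    k = rng.choices(range(len(probs)), weights=probs)[0]  # pick a mode
    return rng.gauss(mus[k], scaled_sigmas[k])


# Hypothetical two-mode mixture: a dominant mode and a rare one.
logits, mus, sigmas = [2.0, 0.0], [0.0, 3.0], [1.0, 1.0]
rng = random.Random(0)
for tau in (0.1, 1.0, 1.3):
    probs, _ = adjust_temperature(logits, sigmas, tau)
    print(tau, probs, sample_mixture(logits, mus, sigmas, tau, rng))
```

Under this assumed scaling, a low τ concentrates nearly all probability on the dominant mode, so the rare mode is almost never selected, while a higher τ flattens the mode probabilities and widens each Gaussian.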

Using a mixture of Gaussian model may seem excessive given that the latent space encoded with the VAE model is just a single diagonal Gaussian distribution. However, the discrete modes in a mixture density model are useful for environments with random discrete events, such as whether a monster decides to shoot a fireball or stay put. While a single diagonal Gaussian might be sufficient to encode individual frames, an RNN with a mixture density output layer makes it easier to model the logic behind a more complicated environment with discrete random states.

For instance, if we set the temperature parameter to a very low value of τ=0.1, effectively training our C with an M that is almost identical to a deterministic LSTM, the monsters inside this generated environment fail to shoot fireballs, no matter what the agent does, due to mode collapse. M is not able to transition to another mode in the mixture of Gaussians where fireballs are formed and shot. Whatever policy is learned inside of this generated environment will achieve a perfect score of 2100 most of the time, but will obviously fail when unleashed into the harsh reality of the actual world, underperforming even a random policy.

In the following demo, we show that even low values of τ∼0.5 make it difficult for the MDN-RNN to generate fireballs:

Interactive Demo

For low τ settings, monsters in the M model rarely shoot fireballs. Even when you try to increase τ to 1.0 using the slider bar, the agent will occasionally extinguish fireballs still being formed, by fooling M.

By making the temperature τ an adjustable parameter of M, we can see the effect of training C inside of virtual environments with different levels of uncertainty, and see how well they transfer over to the actual environment. We experiment with varying τ of the virtual environment, training an agent inside of this virtual environment, and observing its performance when inside the actual environment.

| Temperature τ | Score in Virtual Environment | Score in Actual Environment |
| --- | --- | --- |
| 0.10 | | |
| 0.50 | | |
| 1.00 | | |
| 1.15 | | |
| 1.30 | | |
| Random Policy Baseline | N/A | |
| Gym Leaderboard | N/A | |

In the table above, while we see that increasing τ of M makes it more difficult for C to find adversarial policies, increasing it too much will make the virtual environment too difficult for the agent to learn anything, hence in practice it is a hyperparameter we can tune. The temperature also affects the types of strategies the agent discovers. For example, although the best score obtained is 1092 ± 556 with τ=1.15, increasing τ a notch to 1.30 results in a lower score but at the same time a less risky strategy with a lower variance of returns. For comparison, the best reported score is 820 ± 58.

Iterative Training Procedure

In our experiments, the tasks are relatively simple, so a reasonable world model can be trained using a dataset collected from a random policy. But what if our environments become more sophisticated? In any difficult environment, parts of the world are made available to the agent only after it learns how to strategically navigate through its world.

For more complicated tasks, an iterative training procedure is required. We need our agent to be able to explore its world, and constantly collect new observations so that its world model can be improved and refined over time. An iterative training procedure, adapted from Learning To Think, is as follows:

1. Initialize M, C with random model parameters.

2. Roll out to the actual environment N times. The agent may learn during rollouts. Save all actions $a_t$ and observations $x_t$ during rollouts to a storage device.

3. Train M to model $P(x_{t+1}, r_{t+1}, a_{t+1}, d_{t+1} \mid x_t, a_t, h_t)$ and train C to optimize expected rewards inside of M.

4. Go back to (2) if the task has not been completed.
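The control flow of the procedure above can be sketched as a short loop. This is only a skeleton under stated assumptions: every callable passed in (`collect_rollouts`, `train_world_model`, `train_controller`, `task_solved`) is a hypothetical placeholder, and the toy stand-ins at the bottom exist only to make the example runnable.

```python
def iterative_training(collect_rollouts, train_world_model, train_controller,
                       task_solved, n_rollouts, max_iterations):
    """Skeleton of the collect / train M / train C loop.

    All four callables are hypothetical placeholders supplied by the caller.
    """
    dataset = []
    model, controller = None, None  # step 1: stands in for random initialization
    for iteration in range(1, max_iterations + 1):
        # Step 2: gather N new rollouts from the actual environment.
        dataset.extend(collect_rollouts(controller, n_rollouts))
        # Step 3: refit M on all stored data, then train C inside M.
        model = train_world_model(model, dataset)
        controller = train_controller(controller, model)
        # Step 4: stop once the task is solved, otherwise repeat.
        if task_solved(controller):
            return model, controller, iteration
    return model, controller, max_iterations


# Toy stand-ins: "model quality" is just the amount of data seen so far.
result = iterative_training(
    collect_rollouts=lambda controller, n: [0] * n,
    train_world_model=lambda model, data: len(data),
    train_controller=lambda controller, model: model,
    task_solved=lambda controller: controller >= 30,
    n_rollouts=10,
    max_iterations=10,
)
print(result)
```

Passing the stages in as callables keeps the loop independent of any particular environment or model; a real implementation would replace each stand-in with its own rollout and training code.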

We have shown that one iteration of this training loop was enough to solve simple tasks. For more difficult tasks, we need our controller in Step 2 to actively explore parts of the environment that are beneficial for improving its world model. An exciting research direction is to look at ways to incorporate artificial curiosity, intrinsic motivation, and information seeking abilities in an agent to encourage novel exploration. In particular, we can augment the reward function based on improvement in compression quality.

Swing-up Pendulum from Pixels: Generated rollout after the first iteration. M has difficulty predicting states of a swung up pole since the data collected from the initial random policy is near the steady state in the bottom half. Despite this, C still learns to swing the pole upwards when deployed inside of M.

Swing-up Pendulum from Pixels: Generated rollout after 20 iterations. Deploying policies that swing the pole upwards in the actual environment gathered more data that recorded the pole being in the top half, allowing M to model the environment more accurately, and C to learn a better policy inside of M.

In the present approach, since M is an MDN-RNN that models a probability distribution for the next frame, if it does a poor job, then it means the agent has encountered parts of the world that it is not familiar with. Therefore we can adapt and reuse M's training loss function to encourage curiosity. By flipping the sign of M's loss function in the actual environment, the agent will be encouraged to explore parts of the world that it is not familiar with. The new data it collects may improve the world model.
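One way to read the idea above is that the quantity M minimizes, the negative log-likelihood of what actually happened next, can be handed to the agent as a bonus to maximize. The sketch below computes that quantity for a single scalar latent under a one-dimensional Gaussian mixture. The function names are hypothetical, and treating the raw negative log-likelihood as the reward (with no scaling or mixing with the task reward) is an assumption made for illustration.

```python
import math


def mixture_nll(z_next, weights, mus, sigmas):
    """Negative log-likelihood of scalar z_next under a 1-D Gaussian mixture."""
    log_terms = []
    for w, mu, sigma in zip(weights, mus, sigmas):
        log_pdf = (-0.5 * ((z_next - mu) / sigma) ** 2
                   - math.log(sigma) - 0.5 * math.log(2.0 * math.pi))
        log_terms.append(math.log(w) + log_pdf)
    m = max(log_terms)  # log-sum-exp for numerical stability
    return -(m + math.log(sum(math.exp(t - m) for t in log_terms)))


def curiosity_reward(z_next, weights, mus, sigmas):
    """M is trained to minimize the NLL; the agent is rewarded for a high NLL."""
    return mixture_nll(z_next, weights, mus, sigmas)


# Hypothetical prediction from M for the next latent value.
weights, mus, sigmas = [0.5, 0.5], [0.0, 1.0], [1.0, 1.0]
print(curiosity_reward(0.1, weights, mus, sigmas))  # well predicted
print(curiosity_reward(5.0, weights, mus, sigmas))  # surprising
```

A transition that M predicted well yields a small bonus, while a surprising one yields a large bonus, which is what pushes the agent toward unfamiliar parts of the world.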

The iterative training procedure requires the M model to not only predict the next observation x and done, but also predict the action and reward for the next time step. This may be required for more difficult tasks. For instance, if our agent needs to learn complex motor skills to walk around its environment, the world model will learn to imitate its own C model that has already learned to walk. After difficult motor skills, such as walking, are absorbed into a large world model with lots of capacity, the smaller C model can rely on the motor skills already absorbed by the world model and focus on learning higher-level skills to navigate itself using the motor skills it has already learned. Another related connection is to muscle memory. For instance, as you learn to do something like play the piano, you no longer have to spend working memory capacity on translating individual notes to finger motions -- this all becomes encoded at a subconscious level.

How information becomes memory.

An interesting connection to the neuroscience literature is the work on hippocampal replay that examines how the brain replays recent experiences when an animal rests or sleeps. Replaying recent experiences plays an important role in memory consolidation -- where hippocampus-dependent memories become independent of the hippocampus over a period of time. As Foster puts it, replay is "less like dreaming and more like thought". We invite readers to read Replay Comes of Age for a detailed overview of replay from a neuroscience perspective with connections to theoretical reinforcement learning.

Iterative training could allow the C--M model to develop a natural hierarchical way to learn. Recent works about self-play in RL and PowerPlay also explore methods that lead to a natural curriculum learning, and we feel this is one of the more exciting research areas of reinforcement learning.

Related Work

There is extensive literature on learning a dynamics model, and using this model to train a policy. Many concepts first explored in the 1980s for feed-forward neural networks (FNNs) and in the 1990s for RNNs laid some of the groundwork for Learning to Think. The more recent PILCO is a probabilistic model-based policy search method designed to solve difficult control problems. Using data collected from the environment, PILCO uses a Gaussian process (GP) model to learn the system dynamics, and then uses this model to sample many trajectories in order to train a controller to perform a desired task, such as swinging up a pendulum, or riding a unicycle.

While Gaussian processes work well with a small set of low dimensional data, their computational complexity makes them difficult to scale up to model a large history of high dimensional observations. Other recent works use Bayesian neural networks instead of GPs to learn a dynamics model. These methods have demonstrated promising results on challenging control tasks, where the states are known and well defined, and the observation is relatively low dimensional. Here we are interested in modelling dynamics observed from high dimensional visual data where our input is a sequence of raw pixel frames.

In robotic control applications, the ability to learn the dynamics of a system from observing only camera-based video inputs is a challenging but important problem. Early work on RL for active vision trained an FNN to take the current image frame of a video sequence to predict the next frame, and used this predictive model to train a fovea-shifting control network trying to find targets in a visual scene. To get around the difficulty of training a dynamical model to learn directly from high-dimensional pixel images, researchers explored using neural networks to first learn a compressed representation of the video frames. Recent work along these lines was able to train controllers using the bottleneck hidden layer of an autoencoder as low-dimensional feature vectors to control a pendulum from pixel inputs. Learning a model of the dynamics from a compressed latent space enables RL algorithms to be much more data-efficient. We invite readers to watch Finn's lecture on Model-Based RL to learn more.

Video game environments are also popular in model-based RL research as a testbed for new ideas. Guzdial et al. used a feed-forward convolutional neural network (CNN) to learn a forward simulation model of a video game. Learning to predict how different actions affect future states in the environment is useful for game-play agents, since if our agent can predict what happens in the future given its current state and action, it can simply select the best action that suits its goal. This has been demonstrated not only in early work (when compute was a million times more expensive than today) but also in recent studies on several competitive VizDoom environments.

The works mentioned above use FNNs to predict the next video frame. We may want to use models that can capture longer term time dependencies. RNNs are powerful models suitable for sequence modelling. In a lecture called Hallucination with RNNs, Graves demonstrated the ability of RNNs to learn a probabilistic model of Atari game environments. He trained RNNs to learn the structure of such a game and then showed that they can hallucinate similar game levels on their own.

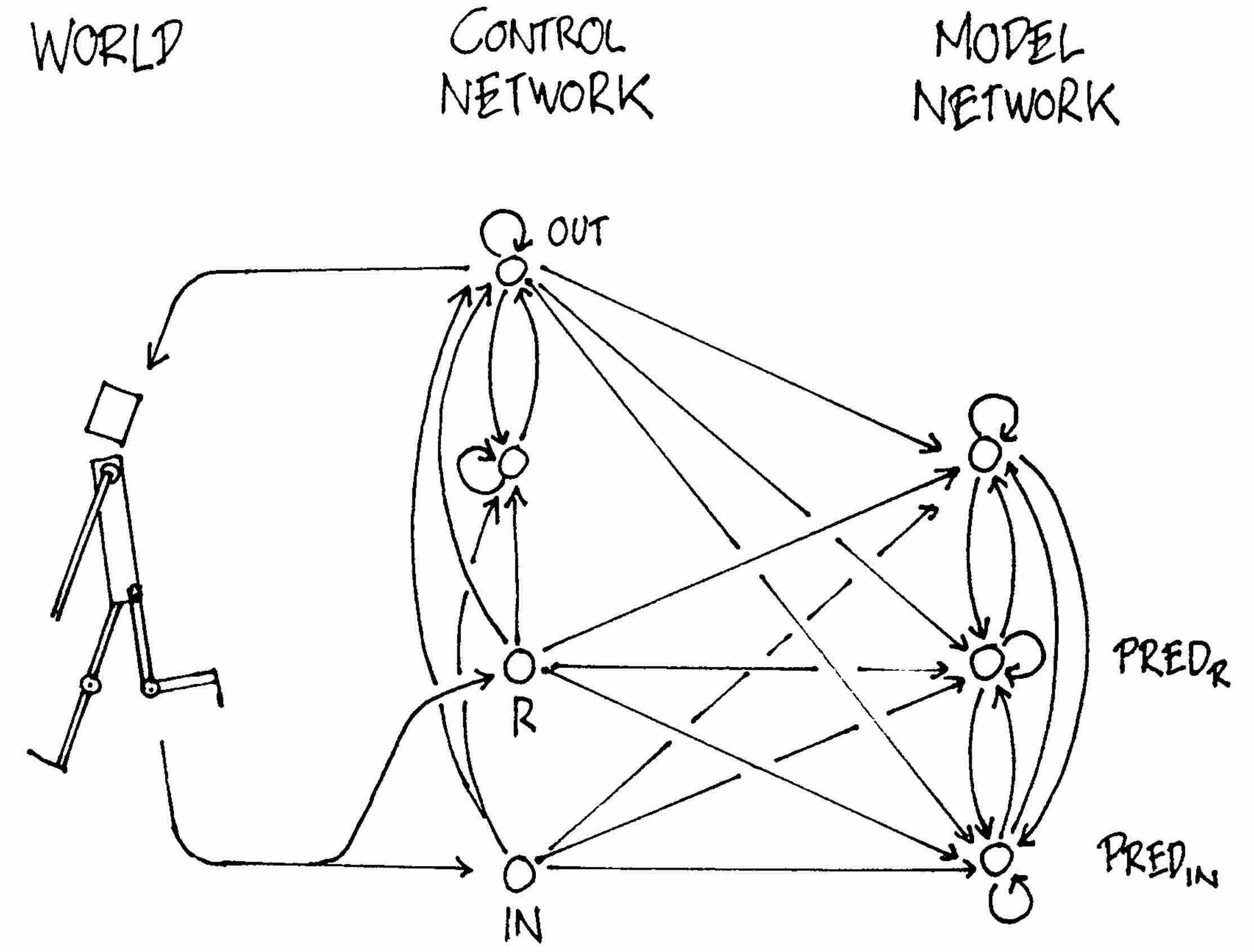

A controller with internal RNN model of the world.

Using RNNs to develop internal models to reason about the future has been explored as early as 1990 in a paper called Making the World Differentiable, and then further explored in . A more recent paper called Learning to Think presented a unifying framework for building an RNN-based general problem solver that can learn a world model of its environment and also learn to reason about the future using this model. Subsequent works have used RNN-based models to generate many frames into the future, and also as an internal model to reason about the future.

In this work, we used evolution strategies (ES) to train our controller, as it offers many benefits. For instance, we only need to provide the optimizer with the final cumulative reward, rather than the entire history. ES is also easy to parallelize -- we can dispatch many rollouts with different candidate solutions to many workers and quickly compute a set of cumulative rewards in parallel. Recent works have confirmed that ES is a viable alternative to traditional Deep RL methods on many strong baseline tasks.

Before the popularity of Deep RL methods, evolution-based algorithms had been shown to be effective at finding solutions for RL tasks. Evolution-based algorithms have even been able to solve difficult RL tasks from high dimensional pixel inputs. More recent works also combine VAE and ES, which is similar to our approach.

Discussion

We have demonstrated the possibility of training an agent to perform tasks entirely inside of its simulated latent space world. This approach offers many practical benefits. For instance, video game engines typically require heavy compute resources for rendering the game states into image frames, or calculating physics not immediately relevant to the game. We may not want to waste cycles training an agent in the actual environment, but instead train the agent as many times as we want inside its simulated environment. Agents that are trained incrementally to simulate reality may prove to be useful for transferring policies back to the real world. Our approach may complement sim2real approaches outlined in previous work.

Furthermore, we can take advantage of deep learning frameworks to accelerate our world model simulations using GPUs in a distributed environment. The benefit of implementing the world model as a fully differentiable recurrent computation graph also means that we may be able to train our agents in the dream directly using the backpropagation algorithm to fine-tune their policies to maximize an objective function.

The choice of implementing V as a VAE and training it as a standalone model also has its limitations, since it may encode parts of the observations that are not relevant to a task. After all, unsupervised learning cannot, by definition, know what will be useful for the task at hand. For instance, our VAE reproduced unimportant detailed brick tile patterns on the side walls in the Doom environment, but failed to reproduce task-relevant tiles on the road in the Car Racing environment. By training together with an M that predicts rewards, the VAE may learn to focus on task-relevant areas of the image, but the tradeoff here is that we may not be able to reuse the VAE effectively for new tasks without retraining. Learning task-relevant features has connections to neuroscience as well. Primary sensory neurons are released from inhibition when rewards are received, which suggests that they generally learn task-relevant features, rather than just any features, at least in adulthood.

Another concern is the limited capacity of our world model. While modern storage devices can store large amounts of historical data generated using an iterative training procedure, our LSTM-based world model may not be able to store all of the recorded information inside of its weight connections. While the human brain can hold decades and even centuries of memories to some resolution, our neural networks trained with backpropagation have more limited capacity and suffer from issues such as catastrophic forgetting. Future work will explore replacing the VAE and MDN-RNN with higher capacity models, or incorporating an external memory module, if we want our agent to learn to explore more complicated worlds.

Ancient drawing (1990) of an RNN-based controller interacting with an environment.

Like early RNN-based C--M systems, ours simulates possible futures time step by time step, without profiting from human-like hierarchical planning or abstract reasoning, which often ignores irrelevant spatial-temporal details. However, the more general Learning To Think approach is not limited to this rather naive approach. Instead it allows a recurrent C to learn to address "subroutines" of the recurrent M, and reuse them for problem solving in arbitrary computable ways, e.g., through hierarchical planning or other kinds of exploiting parts of M's program-like weight matrix. A recent One Big Net extension of the C--M approach collapses C and M into a single network, and uses PowerPlay-like behavioural replay (where the behaviour of a teacher net is compressed into a student net) to avoid forgetting old prediction and control skills when learning new ones. Experiments with those more general approaches are left for future work.

If you would like to discuss any issues or give feedback, please visit the GitHub repository of this page for more information.

Acknowledgments

We would like to thank Blake Richards, Kory Mathewson, Kyle McDonald, Kai Arulkumaran, Ankur Handa, Denny Britz, Elwin Ha and Natasha Jaques for their thoughtful feedback on this article, and for offering their valuable perspectives and insights from their areas of expertise.

The interactive demos in this article were all built using p5.js. Deploying all of these machine learning models in a web browser was made possible with deeplearn.js, a hardware-accelerated machine learning framework for the browser, developed by the People+AI Research Initiative (PAIR) team at Google. A special thanks goes to Nikhil Thorat and Daniel Smilkov for their support.

We would like to thank Chris Olah and the rest of the Distill editorial team for their valuable feedback and generous editorial support, in addition to supporting the use of their distill.pub technology.

We would like to extend our thanks to Alex Graves, Douglas Eck, Mike Schuster, Rajat Monga, Vincent Vanhoucke, Jeff Dean and the Google Brain team for helpful feedback and for encouraging us to explore this area of research.

Any errors here are our own and do not reflect opinions of our proofreaders and colleagues. If you see mistakes or want to suggest changes, feel free to contribute feedback by participating in the discussion forum for this article.

The experiments in this article were performed on both a P100 GPU and a 64-core CPU Ubuntu Linux virtual machine provided by Google Cloud Platform, using TensorFlow and OpenAI Gym.

Citation

For attribution in academic contexts, please cite this work as

Ha and Schmidhuber, "Recurrent World Models Facilitate Policy Evolution", 2018.

BibTeX citation

@incollection{ha2018worldmodels,
  title = {Recurrent World Models Facilitate Policy Evolution},
  author = {Ha, David and Schmidhuber, J{\"u}rgen},
  booktitle = {Advances in Neural Information Processing Systems 31},
  pages = {2451--2463},
  year = {2018},
  publisher = {Curran Associates, Inc.},
  url = {https://papers.nips.cc/paper/7512-recurrent-world-models-facilitate-policy-evolution},
  note = "\url{https://worldmodels.github.io}",
}

Open Source Code

The instructions to reproduce the experiments in this work are available here.

Reuse

Diagrams and text are licensed under Creative Commons Attribution CC-BY 4.0 with the source available on GitHub, unless noted otherwise. The figures that have been reused from other sources don’t fall under this license and can be recognized by the citations in their caption.

Appendix

In this section we will describe in more detail the models and training methods used in this work.

Variational Autoencoder

We trained a Convolutional Variational Autoencoder (ConvVAE) model as our agent's V. Unlike a vanilla autoencoder, the ConvVAE enforces a Gaussian prior over the latent vector $z_t$. This limits the information capacity available for compressing each frame, but it also makes the world model more robust to unrealistic $z_t \in \mathbb{R}^{N_z}$ vectors generated by M.

In the following diagram, we describe the shape of our tensor at each layer of the ConvVAE and also describe the details of each layer:

Convolutional Variational Autoencoder

Our latent vector $z_t$ is sampled from a factored Gaussian distribution $N(\mu_t, \sigma_t^2 I)$, with mean $\mu_t \in \mathbb{R}^{N_z}$ and diagonal variance $\sigma_t^2 \in \mathbb{R}^{N_z}$. As the environment may give us observations as high dimensional pixel images, we first resize each image to 64x64 pixels and use this resized image as V's observation. Each pixel is stored as three floating point values between 0 and 1 to represent each of the RGB channels. The ConvVAE takes in this 64x64x3 input tensor and passes it through 4 convolutional layers to encode it into low dimension vectors $\mu_t$ and $\sigma_t$. In the Car Racing task, $N_z$ is 32 while for the Doom task $N_z$ is 64. The latent vector $z_t$ is passed through 4 deconvolution layers used to decode and reconstruct the image.

Each convolution and deconvolution layer uses a stride of 2. The layers are indicated in the diagram in italics as Activation-type Output Channels x Filter Size. All convolutional and deconvolutional layers use ReLU activations except for the output layer, as we need the output to be between 0 and 1. We trained the model for 1 epoch over the data collected from a random policy, using the L2 distance between the input image and the reconstruction to quantify the reconstruction loss we optimize for, in addition to the KL loss.
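To make the two loss terms concrete, here is a minimal sketch of a VAE objective that adds a squared L2 reconstruction error to the closed-form KL divergence between a diagonal Gaussian and a standard normal prior. The function name, the log-variance parameterization, and the unweighted sum of the two terms are assumptions made for illustration, not details confirmed by the text.

```python
import math


def vae_loss(x, x_recon, mu, log_var):
    """Return (total, reconstruction, kl) for one flattened example.

    x, x_recon: pixel values in [0, 1]; mu, log_var: latent mean and
    log-variance, each of length N_z.
    """
    # Squared L2 distance between the input and its reconstruction.
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    # KL( N(mu, sigma^2 I) || N(0, I) ) for a diagonal Gaussian.
    kl = -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                    for m, lv in zip(mu, log_var))
    return recon + kl, recon, kl


# Tiny hypothetical example with 4 "pixels" and a 2-D latent.
total, recon, kl = vae_loss(
    x=[0.0, 0.5, 1.0, 0.25],
    x_recon=[0.1, 0.5, 0.9, 0.25],
    mu=[1.0, 0.0],
    log_var=[0.0, 0.0],
)
print(total, recon, kl)
```

The KL term is zero only when the encoder outputs exactly the prior (zero mean, unit variance), which is the pressure that limits how much information each latent vector can carry.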

Mixture Density Network + Recurrent Neural Network

To implement M, we use an LSTM recurrent neural network combined with a Mixture Density Network as the output layer, as illustrated in the figure below:

MDN-RNN

We use this network to model the probability distribution of $z_t$ in the next time step as a mixture of Gaussians. This approach is very similar to Graves' Generating Sequences with RNNs in the Unconditional Handwriting Generation section and also the decoder-only section of SketchRNN. The only difference is that we did not model the correlation parameter between each element of $z_t$, and instead had the MDN-RNN output a diagonal covariance matrix of a factored Gaussian distribution.

Unlike the handwriting and sketch generation works, rather than using the MDN-RNN to model the probability density function (pdf) of the next pen stroke, we model instead the pdf of the next latent vector $z_t$. We sample from this pdf at each time step to generate the environments. In the Doom task, we also use the MDN-RNN to predict the probability of whether the agent has died in this frame. If that probability is above 50%, then we set done to be True in the virtual environment. Given that death is a low probability event at each time step, we find the cutoff approach to be more stable compared to sampling from the Bernoulli distribution.
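The cutoff rule is simple to state in code. The second function below offers one possible intuition for why sampling may be less stable: even a small per-step death probability, if sampled independently at every step, is very likely to end a long rollout by chance. The per-step probability of 0.01 and the rollout length of 500 are hypothetical numbers chosen for illustration, and both function names are made up for this sketch.

```python
def done_by_cutoff(p_death, threshold=0.5):
    """Deterministic rule: the episode ends only if M is fairly sure of death."""
    return p_death > threshold


def prob_spurious_done(p_death, n_steps):
    """Chance that per-step Bernoulli sampling fires at least once in n_steps."""
    return 1.0 - (1.0 - p_death) ** n_steps


# Hypothetical rollout in which M predicts a 1% death probability every step.
print(done_by_cutoff(0.01))            # the cutoff never ends this rollout
print(prob_spurious_done(0.01, 500))   # sampling almost surely ends it early
```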

The MDN-RNNs were trained for 20 epochs on the data collected from a random policy agent. In the Car Racing task, the LSTM used 256 hidden units, in the Doom task 512 hidden units. In both tasks, we used 5 Gaussian mixtures, but unlike , we did not model the correlation parameters, hence zt is sampled from a factored mixture of Gaussian distributions.

When training the MDN-RNN using teacher forcing from the recorded data, we store a pre-computed set of $\mu_t$ and $\sigma_t$ for each of the frames, and sample an input $z_t \sim N(\mu_t, \sigma_t^2 I)$ each time we construct a training batch, to prevent overfitting our MDN-RNN to a specific sampled $z_t$.
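The resampling step described above can be sketched as follows, assuming the stored statistics are plain nested lists with one list of per-dimension values for each frame. The function name and the data layout are hypothetical.

```python
import random


def sample_latents(mus, sigmas, rng):
    """Draw a fresh z_t ~ N(mu_t, sigma_t^2 I) for every stored frame.

    mus, sigmas: lists of frames, each frame a list of N_z floats.
    """
    return [[rng.gauss(m, s) for m, s in zip(mu_t, sigma_t)]
            for mu_t, sigma_t in zip(mus, sigmas)]


# Two stored frames with a 3-D latent (toy numbers).
mus = [[0.0, 1.0, -1.0], [0.5, 0.5, 0.5]]
sigmas = [[0.1, 0.1, 0.1], [0.2, 0.2, 0.2]]
rng = random.Random(0)
batch_a = sample_latents(mus, sigmas, rng)  # one training batch
batch_b = sample_latents(mus, sigmas, rng)  # same frames, new noise
print(batch_a != batch_b)
```

Because only the means and standard deviations are stored, each pass over the data presents the MDN-RNN with slightly different inputs for the same recorded frames.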

Controller

For both environments, we applied tanh nonlinearities to clip and bound the action space to the appropriate ranges. For instance, in the Car Racing task, the steering wheel has a range from -1.0 to 1.0, the acceleration pedal from 0.0 to 1.0, and the brakes from 0.0 to 1.0. In the Doom environment, we converted the discrete actions into a continuous action space between -1.0 and 1.0, and divided this range into thirds to indicate whether the agent is moving left, staying where it is, or moving to the right. We give C a feature vector as its input, consisting of $z_t$ and the hidden state of the MDN-RNN. In the Car Racing task, this hidden state is the output vector $h_t \in \mathbb{R}^{N_h}$ of the LSTM, while for the Doom task it is both the cell vector $c_t \in \mathbb{R}^{N_h}$ and the output vector $h_t$ of the LSTM.
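The action bounding described above might look like the following sketch. Two details are assumptions made for illustration: the affine rescaling `(tanh(x) + 1) / 2` used to bring the pedal outputs into the 0.0 to 1.0 range, and the choice that the lowest third of the Doom range means "left". The function names are hypothetical.

```python
import math


def car_racing_action(raw):
    """Map 3 unbounded controller outputs to (steer, gas, brake)."""
    steer = math.tanh(raw[0])                   # range -1.0 to 1.0
    gas = (math.tanh(raw[1]) + 1.0) / 2.0       # assumed rescaling to 0.0..1.0
    brake = (math.tanh(raw[2]) + 1.0) / 2.0     # assumed rescaling to 0.0..1.0
    return steer, gas, brake


def doom_action(raw):
    """Map one unbounded output to a discrete move by splitting [-1, 1] in thirds."""
    a = math.tanh(raw)
    if a < -1.0 / 3.0:
        return "left"    # assumed ordering: lowest third is left
    if a > 1.0 / 3.0:
        return "right"
    return "stay"


print(car_racing_action([0.3, 2.0, -2.0]))
print([doom_action(v) for v in (-2.0, 0.0, 2.0)])
```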

Evolution Strategies

We used Covariance-Matrix Adaptation Evolution Strategy (CMA-ES) to evolve C's weights. Following the approach described in Evolving Stable Strategies, we used a population size of 64, and had each agent perform the task 16 times with different initial random seeds. The agent's fitness value is the average cumulative reward of the 16 random rollouts. The figure below charts the best performer, worst performer, and mean fitness of the population of 64 agents at each generation:

Training of CarRacing-v0
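The fitness evaluation described above, where each of 64 candidates is scored by its average cumulative reward over 16 seeded rollouts, can be sketched with a much simpler evolution strategy than CMA-ES. To be clear about the swap: the update below just averages the top quarter of the population with a fixed search width, and does not adapt a covariance matrix. The toy reward function, the elite fraction, and all names are hypothetical.

```python
import random


def fitness(params, rollout, n_rollouts=16, base_seed=0):
    """Average cumulative reward over n_rollouts differently seeded rollouts."""
    total = 0.0
    for i in range(n_rollouts):
        total += rollout(params, seed=base_seed + i)
    return total / n_rollouts


def simple_es_step(mean, sigma, rollout, rng, population_size=64):
    """One generation of a basic truncation-selection ES (not CMA-ES)."""
    population = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                  for _ in range(population_size)]
    scores = [fitness(p, rollout) for p in population]
    ranked = sorted(zip(scores, population), key=lambda sp: sp[0], reverse=True)
    elite = [p for _, p in ranked[:population_size // 4]]
    new_mean = [sum(col) / len(elite) for col in zip(*elite)]
    # Best, mean, and worst fitness are the three curves one would chart.
    return new_mean, max(scores), sum(scores) / len(scores), min(scores)


def toy_rollout(params, seed):
    """Stand-in for an environment rollout: noisy reward peaked at all-ones."""
    noise = random.Random(seed).gauss(0.0, 0.1)
    return -sum((p - 1.0) ** 2 for p in params) + noise


rng = random.Random(0)
mean = [0.0, 0.0, 0.0]
for generation in range(30):
    mean, best, avg, worst = simple_es_step(mean, 0.2, toy_rollout, rng)
print(mean, best, avg, worst)
```

Since every candidate's 16 rollouts are independent of the others, the 64 fitness evaluations in a generation can be farmed out to separate workers, which is the parallelism the text refers to.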

Since the requirement of this environment is to have an agent achieve an average score above 900 over 100 random rollouts, we took the best performing agent at the end of every 25 generations, and tested it over 1024 random rollout scenarios to record this average on the red line. After 1800 generations, an agent was able to achieve an average score of 900.46 over 1024 random rollouts. We used 1024 random rollouts rather than 100 because each process of the 64-core machine had been configured to run 16 times already, effectively using a full generation of compute after every 25 generations to evaluate the best agent 1024 times. In the figure below, we plot the results of the same agent evaluated over 100 rollouts:

Histogram of cumulative rewards.

Average score is 906 ± 21.

We also experimented with an agent that has access to only the $z_t$ vector from the VAE, but not the RNN's hidden states. We tried 2 variations. In the first variation, C maps $z_t$ directly to the action space $a_t$. In the second variation, we added a hidden layer with 40 tanh activations between $z_t$ and $a_t$, increasing the number of model parameters of C to 1443, making it more comparable with the original setup. These results are shown in the two figures below:

When agent sees only zt, average score is 632 ± 251.

When agent sees only zt, with a hidden layer, average score is 788 ± 141.
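The parameter count of 1443 quoted above can be checked with a little arithmetic, using the Car Racing sizes given in this appendix (a 32-dimensional latent, 256 LSTM hidden units, and 3 action outputs). The count for the original setup assumes a single linear layer over the concatenation of the latent vector and the LSTM output; that architecture is an assumption of this sketch, as is the helper name.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


N_Z, N_H, N_ACTIONS, N_HIDDEN = 32, 256, 3, 40

# Variation 1: z_t mapped directly to the 3 actions.
linear_z_only = dense_params(N_Z, N_ACTIONS)

# Variation 2: z_t -> 40 tanh units -> 3 actions.
hidden_z_only = dense_params(N_Z, N_HIDDEN) + dense_params(N_HIDDEN, N_ACTIONS)

# Assumed original setup: [z_t, h_t] mapped linearly to the 3 actions.
linear_z_and_h = dense_params(N_Z + N_H, N_ACTIONS)

print(linear_z_only, hidden_z_only, linear_z_and_h)
```

The hidden-layer variant works out to 32 × 40 + 40 + 40 × 3 + 3 = 1443 parameters, matching the figure in the text.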

DoomRNN

We conducted a similar experiment on the generated Doom environment we called DoomRNN. Please note that we did not attempt to train our agent on the actual VizDoom environment, but only used VizDoom for the purpose of collecting training data using a random policy. DoomRNN is more computationally efficient compared to VizDoom as it only operates in latent space without the need to render an image at each time step, and we do not need to run the actual Doom game engine.

In our virtual DoomRNN environment we increased the temperature slightly and used τ=1.15 to make the agent learn in a more challenging environment. The best agent managed to obtain an average score of 959 over 1024 random rollouts. This is the highest score of the red line in the figure below:

Training of DoomRNN

This same agent achieved an average score of 1092 ± 556 over 100 random rollouts when deployed to the actual DoomTakeCover-v0 environment, as shown in the figure below:

Histogram of time steps survived in the actual environment over 100 consecutive trials.

Average score is 1092 ± 556.